Well folks, it’s been a minute. How’s your pandemic going? Hopefully safe and healthy.

This month’s blog party is brought to you by Lisa GB, and the topic is “What (else) have you learned by Presenting?” — either giving a talk at a big conference, a SQL Saturday or meetup, or (like me, because I’m still working my way up to it) just to your own little group of coworkers.

I don’t have that much presentation experience under my belt yet. After COVID started, I briefly tried my hand at Twitch streaming, only to walk away dejected and disillusioned because nobody watched. (Probably blame the content as much as the presenter, but whatever.) I did give a few “big room” talks at work last year, which went pretty well, and I got some great feedback, including helpful constructive criticism — talk slower, keep things on-topic and relevant to the work we’re doing, etc. All good stuff.

Lately, I’ve been doing brief “TSQL Tuesday” video-meetings with very small groups of colleagues (usually only 2-5 people show up any given week). We talk about whatever database-related points of interest have come up during our day-to-day grind. Sometimes it’s completely spur-of-the-moment. At least a few times, one of my stellar new BAs (also QA/Tester) brought something to the table that even *I* wasn’t familiar with, like the cool IIF function in TSQL, or the EXCEPT UNION EXCEPT method for comparing homogeneous data-sets, along with the related concept of “bucket chemistry” with large buckets of data in preliminary analysis. (Sounds like a blog post that someone should write.)
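For anyone who hasn't seen those two before, here's a minimal sketch of what they look like. The temp tables, columns, and sample rows are made up purely for illustration, and I'm using UNION ALL between the two EXCEPTs, which is one common way to write it, not necessarily the exact form we used at work.

```sql
-- Hypothetical sample data: two result sets with the same shape.
CREATE TABLE #Expected (Id int NOT NULL, Amount decimal(10,2) NULL);
CREATE TABLE #Actual   (Id int NOT NULL, Amount decimal(10,2) NULL);

INSERT INTO #Expected (Id, Amount) VALUES (1, 10.00), (2, 20.00), (3, NULL);
INSERT INTO #Actual   (Id, Amount) VALUES (1, 10.00), (2, 25.00), (4, 40.00);

-- EXCEPT / UNION ALL / EXCEPT: rows that exist in one set but not the other,
-- in both directions. Each EXCEPT sits in its own derived table so the
-- set operators are evaluated in the intended order.
SELECT 'Expected only' AS FoundIn, d.Id, d.Amount
FROM (SELECT Id, Amount FROM #Expected
      EXCEPT
      SELECT Id, Amount FROM #Actual) AS d
UNION ALL
SELECT 'Actual only', d.Id, d.Amount
FROM (SELECT Id, Amount FROM #Actual
      EXCEPT
      SELECT Id, Amount FROM #Expected) AS d;

-- IIF (SQL Server 2012 and later): shorthand for a two-branch CASE expression.
SELECT Id, Amount, IIF(Amount >= 20, 'large', 'small') AS Bucket
FROM #Actual;

DROP TABLE #Expected, #Actual;
```

A nice side effect of EXCEPT is that it treats two NULLs as equal when comparing rows, so you don't have to wrap every nullable column in ISNULL the way you would with a join-based comparison.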

Here are the things I’ve learned. Well, besides those mentioned above.

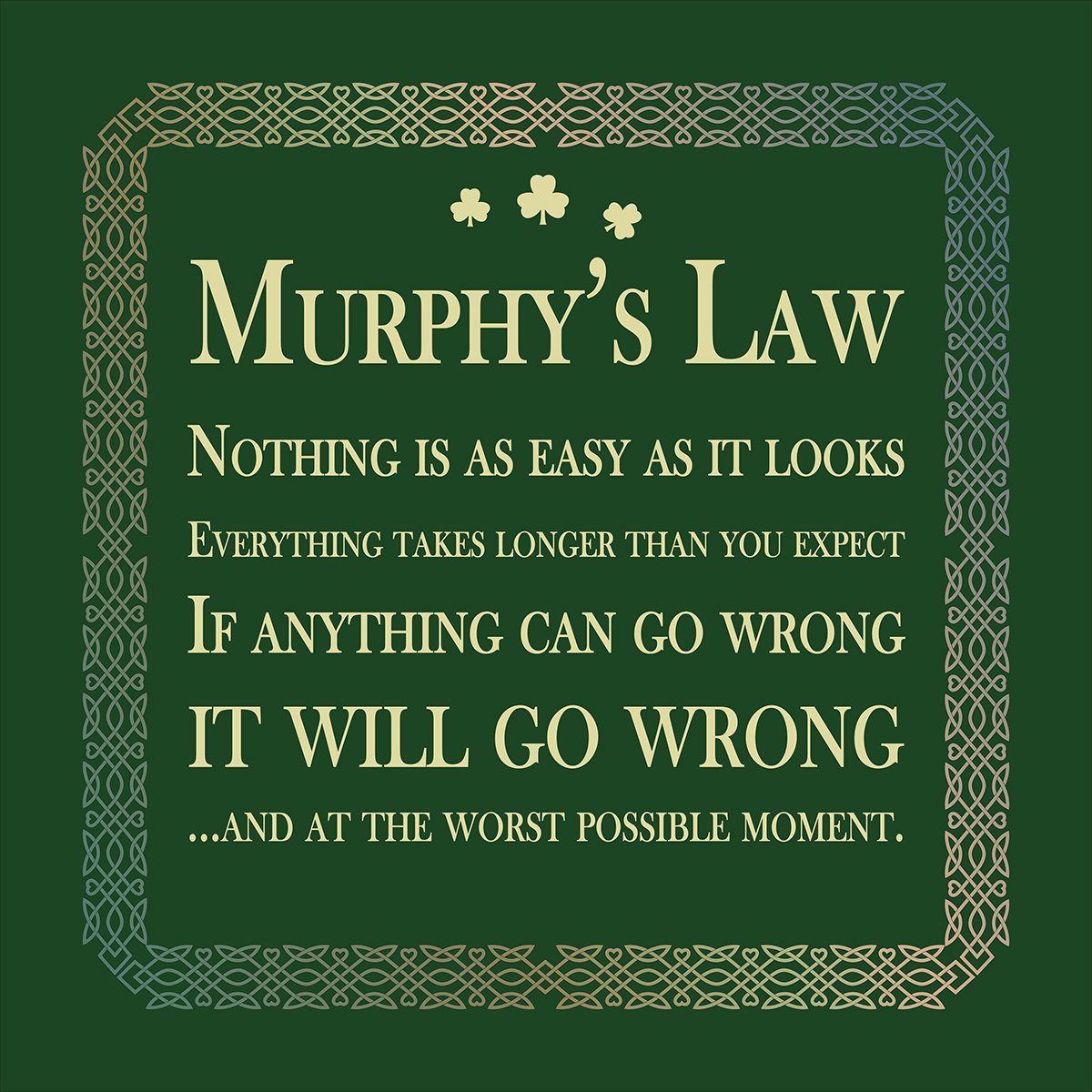

A. I really do talk way too fast when I’m presenting. Still. Trying to work on it.

B. I enjoy audience interaction much more than monologue-ing.

C. Never type in demos.

And finally, I’ll leave this as a teaser for a future post. While working on some demo material for “#TempTables – Use or Abuse?”, I got super confused about temp-table scope in the context of stored procs that abort or fail. So for a moment I understood why developers insisted on putting DROP TABLE #MyTempTable at the end of every one of their procs: it’s basically a safeguard, with no legitimate downside. Anyway, as I read into things and did a bit of research, I found this gem about how temp-tables are cached, and realized that I’d been committing a particular sin (SELECT INTO #temp, followed by adding columns to fetch more data) for a long time without realizing its risks.
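To make that "sin" concrete, here's a rough sketch of the pattern next to one alternative. The table and column names are hypothetical, and the queries against sys.objects and sys.columns are just stand-ins for real work. My working assumption, pending the full post, is that the risk is about temp-table caching inside stored procs: as I understand it, running DDL against a temp table after it has been created is one of the things that prevents it from being cached.

```sql
-- The pattern in question: SELECT INTO, then widen the table afterwards.
SELECT o.object_id, o.name
INTO #Objects
FROM sys.objects AS o;

ALTER TABLE #Objects ADD ColumnCount int NULL;  -- DDL after creation

UPDATE t
SET ColumnCount = (SELECT COUNT(*) FROM sys.columns AS c
                   WHERE c.object_id = t.object_id)
FROM #Objects AS t;

-- One alternative: declare the full shape up front, then fill it in.
CREATE TABLE #ObjectsV2
(
    object_id   int     NOT NULL,
    name        sysname NOT NULL,
    ColumnCount int     NULL
);

INSERT INTO #ObjectsV2 (object_id, name)
SELECT o.object_id, o.name
FROM sys.objects AS o;

UPDATE t
SET ColumnCount = (SELECT COUNT(*) FROM sys.columns AS c
                   WHERE c.object_id = t.object_id)
FROM #ObjectsV2 AS t;

DROP TABLE #Objects, #ObjectsV2;
```

Whether the second version is actually better in a given proc is exactly what I want to dig into, so take this as a sketch and not a recommendation.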

Stay safe and healthy friends!

PS: there’s been a bunch of twitter on Twitter about PASS, people resigning left and right, and I’ve no idea what’s going on, but I’ll get the popcorn, cuz it’s sure to get entertaining. =D
